Test Development

Department of Psychology and Child Development

Aims in this lecture

- Describe different types of items.

- Introduce basic notions of Classical Test Theory (CTT).

- Introduce basic notions of Item Response Theory (IRT).

How do we create items?

Items are questions included in a survey, personality test, job evaluation, performance evaluation, educational evaluation, or in a program evaluation process.

Before creating new items, ask yourself the following questions:

- What are you aiming to measure? Is it a latent construct or a behavior?

- What is your target population?

- Do you need to create your instrument from scratch, or can you borrow ideas or items from previously published articles?

- Do you need an interview, or is the target latent construct better measured with a self-report instrument?

- Should you use Likert scales or another type of item?

- How will you evaluate your items once you have collected the data?

Item formats

The Dichotomous Format

- It is also called a “binary item”.

- These are items where the participant has only two options.

- It could be YES or NO.

- It could be TRUE or FALSE.

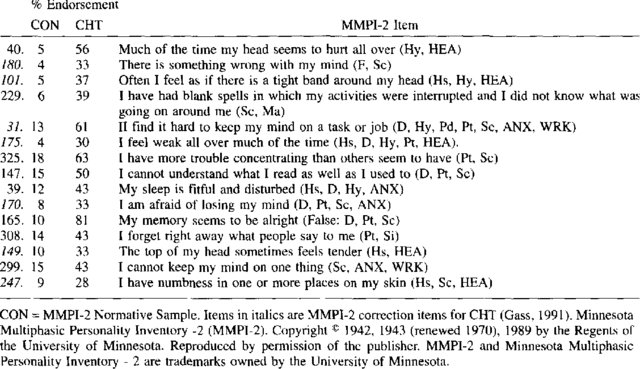

These examples are taken from the Minnesota Multiphasic Personality Inventory (MMPI):

The Polytomous Format

- These are items with more than two response options, as in multiple-choice questions.

- It is the most common item format in educational evaluation.

- This is an example:


Isabel conducted a study to evaluate whether baby formula had an effect on total fat in 1-year-old babies. In this study, she had two groups: one group of babies fed only with baby formula and one group of breastfed babies. Which option corresponds to the best model, or the best models, to analyze the data? (2 points)

1. Classical regression model.
2. t-test for paired samples.
3. t-test for independent samples.
4. Options 1 and 3 would be the best choices.

- In education, multiple-choice answers are transformed into “Correct” or “Incorrect”, so in the end we analyze each item as a binary item. Commonly, we code an answer as “1” when the participant answered the question correctly and “0” when the answer is wrong. You can see an example here: CLICK HERE

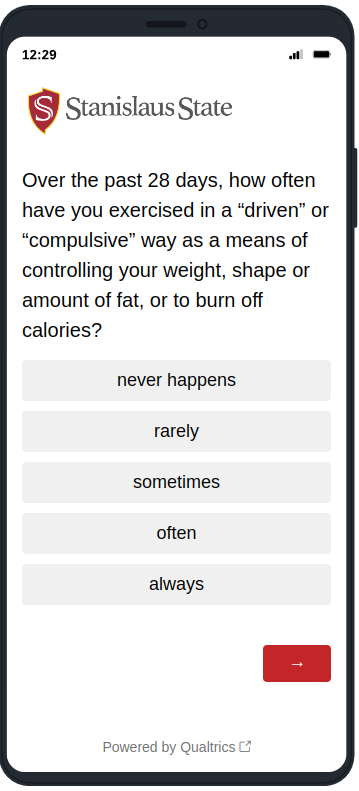

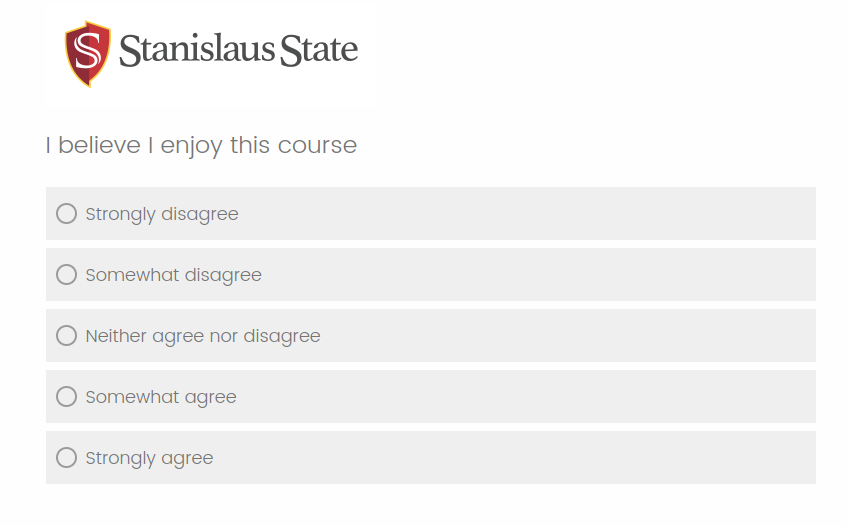
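The 0/1 coding described above can be sketched in a few lines of Python. This is a minimal illustration, not a specific scoring tool: the answer key, the participant IDs, and the helper name `score_responses` are hypothetical.

```python
# Minimal sketch: score multiple-choice answers as 1 (correct) or 0 (incorrect).
# The answer key and the raw answers are hypothetical example data.
from typing import Dict, List


def score_responses(responses: List[str], key: List[str]) -> List[int]:
    """Return 1 for each answer that matches the key, 0 otherwise."""
    if len(responses) != len(key):
        raise ValueError("responses and key must have the same length")
    return [1 if given == correct else 0 for given, correct in zip(responses, key)]


# Hypothetical key for a 4-item test (one letter per item).
answer_key = ["C", "A", "D", "B"]

# Hypothetical raw answers from three participants.
raw_answers: Dict[str, List[str]] = {
    "p1": ["C", "A", "D", "B"],
    "p2": ["C", "B", "D", "A"],
    "p3": ["A", "B", "C", "B"],
}

scored = {pid: score_responses(ans, answer_key) for pid, ans in raw_answers.items()}
for pid, items in scored.items():
    print(pid, items, "total =", sum(items))
```

Here `p2` is scored `[1, 0, 1, 0]` with a total of 2. The resulting 0/1 matrix is the usual input for the item statistics discussed later in this lecture.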

Likert Items

I explained Likert scales briefly in this slide: CLICK HERE

The main point of Likert scales is to assign ordered numeric values to ratings of opinions, thoughts, beliefs, and attitudes.

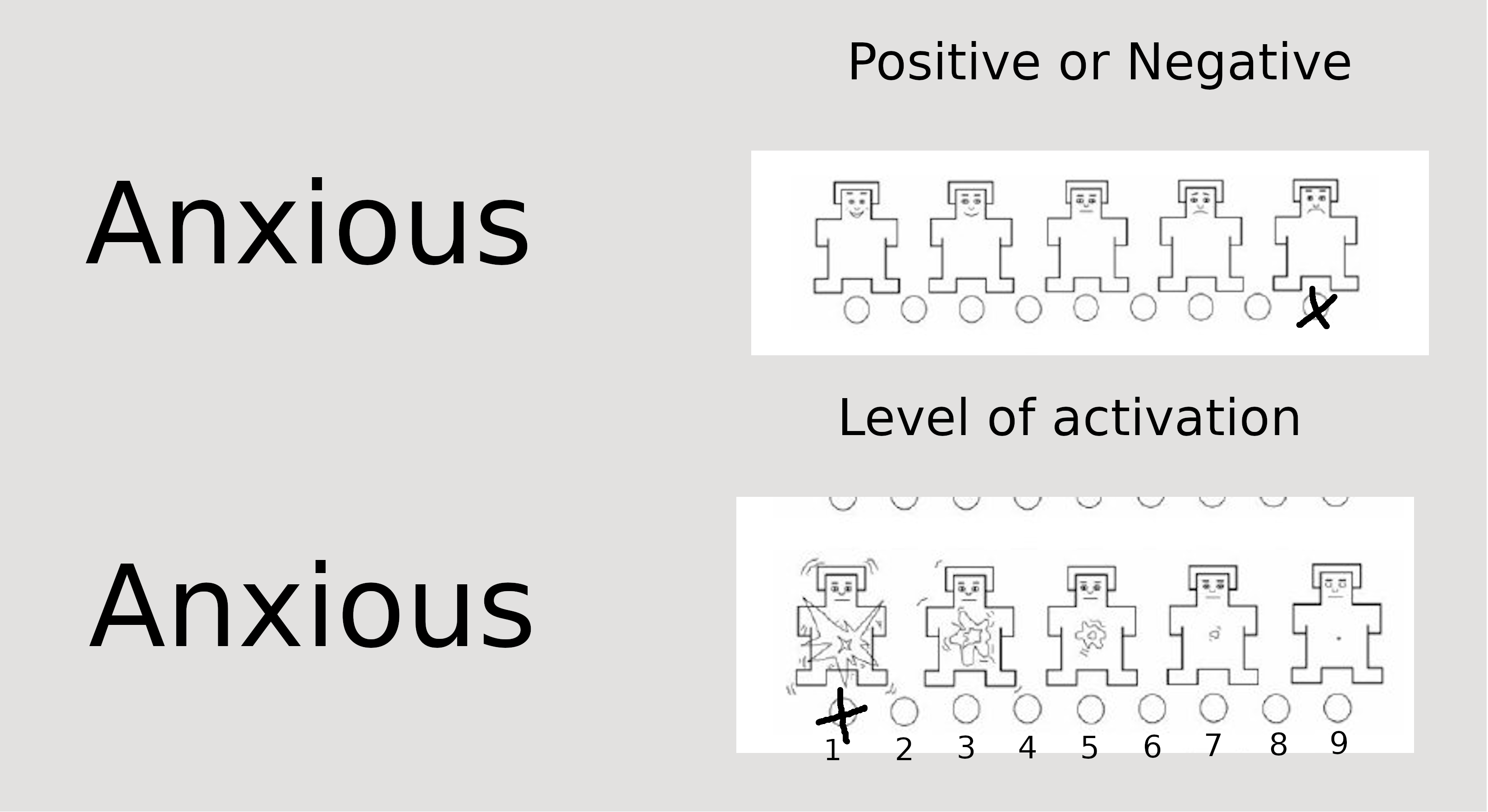
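As a minimal Python sketch of assigning ordered numeric values to ratings, assume a hypothetical 5-point agreement scale (the labels, the mapping `LIKERT_5`, and the responses are illustrative, not taken from a specific instrument):

```python
# Minimal sketch: convert Likert labels into ordered numeric codes.
# The 5-point label set and the responses are hypothetical examples.
from typing import List

LIKERT_5 = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neither agree nor disagree": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def code_likert(labels: List[str]) -> List[int]:
    """Map each response label to its ordered numeric value."""
    return [LIKERT_5[label] for label in labels]


answers = ["Agree", "Strongly agree", "Disagree", "Neither agree nor disagree"]
codes = code_likert(answers)
print(codes)       # [4, 5, 2, 3]
print(sum(codes))  # 14
```

The codes preserve the order of the categories. Summing them into a total score, as the last line does, also treats the categories as equally spaced, which is a common but debatable assumption.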

This is also a peculiar use of a Likert scale:

You can read more about this item in this presentation: CLICK HERE

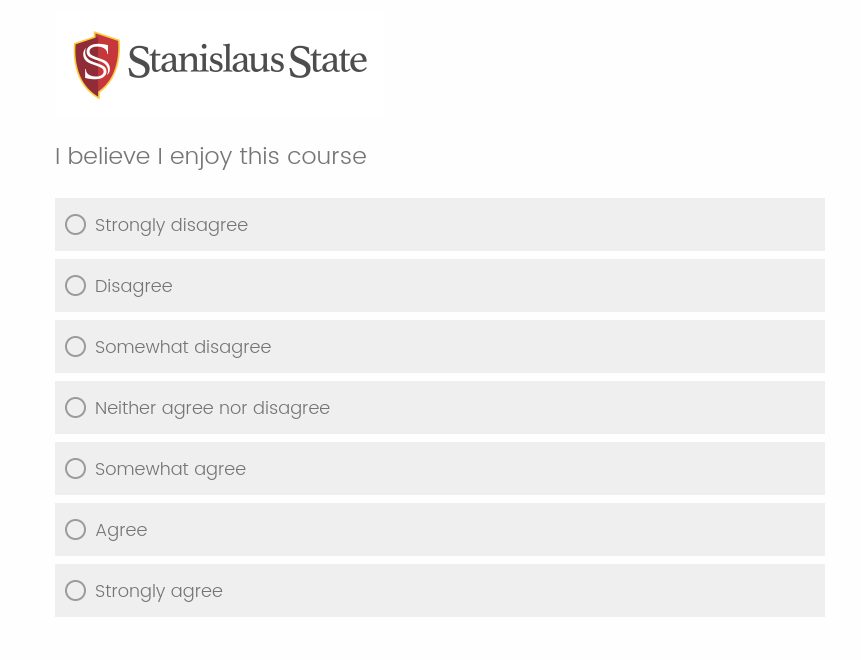

Likert Items: More examples

Likert Items: How many options?

- You will find many recommendations online about the number of response options in a Likert scale. In my experience, when there are too many options, participants report difficulty selecting one. However, this might depend on the participants' academic level.

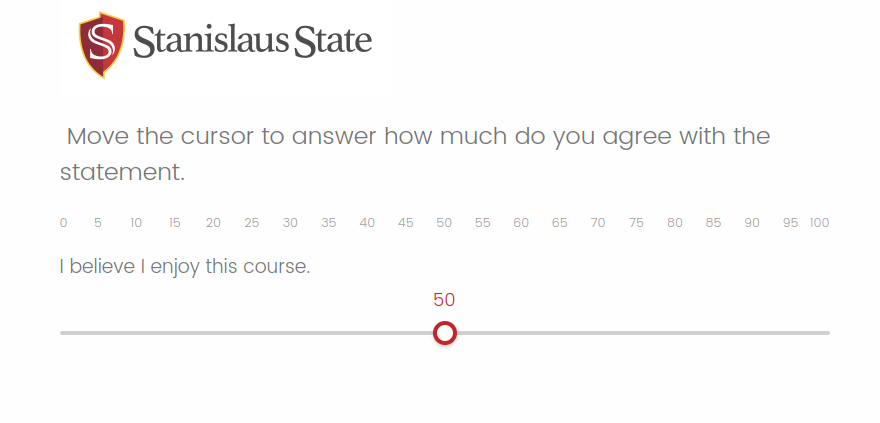

Visual Analog Scale (VAS)

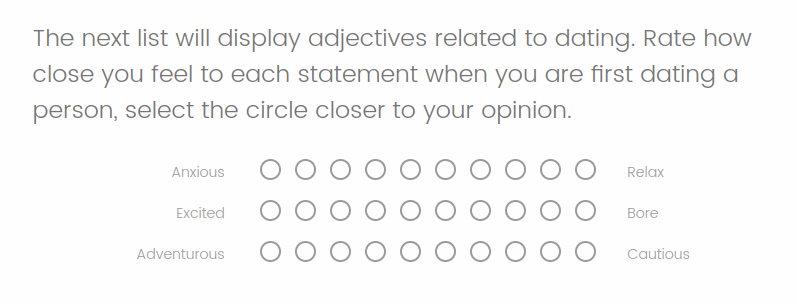

Semantic Differential

Item evaluation

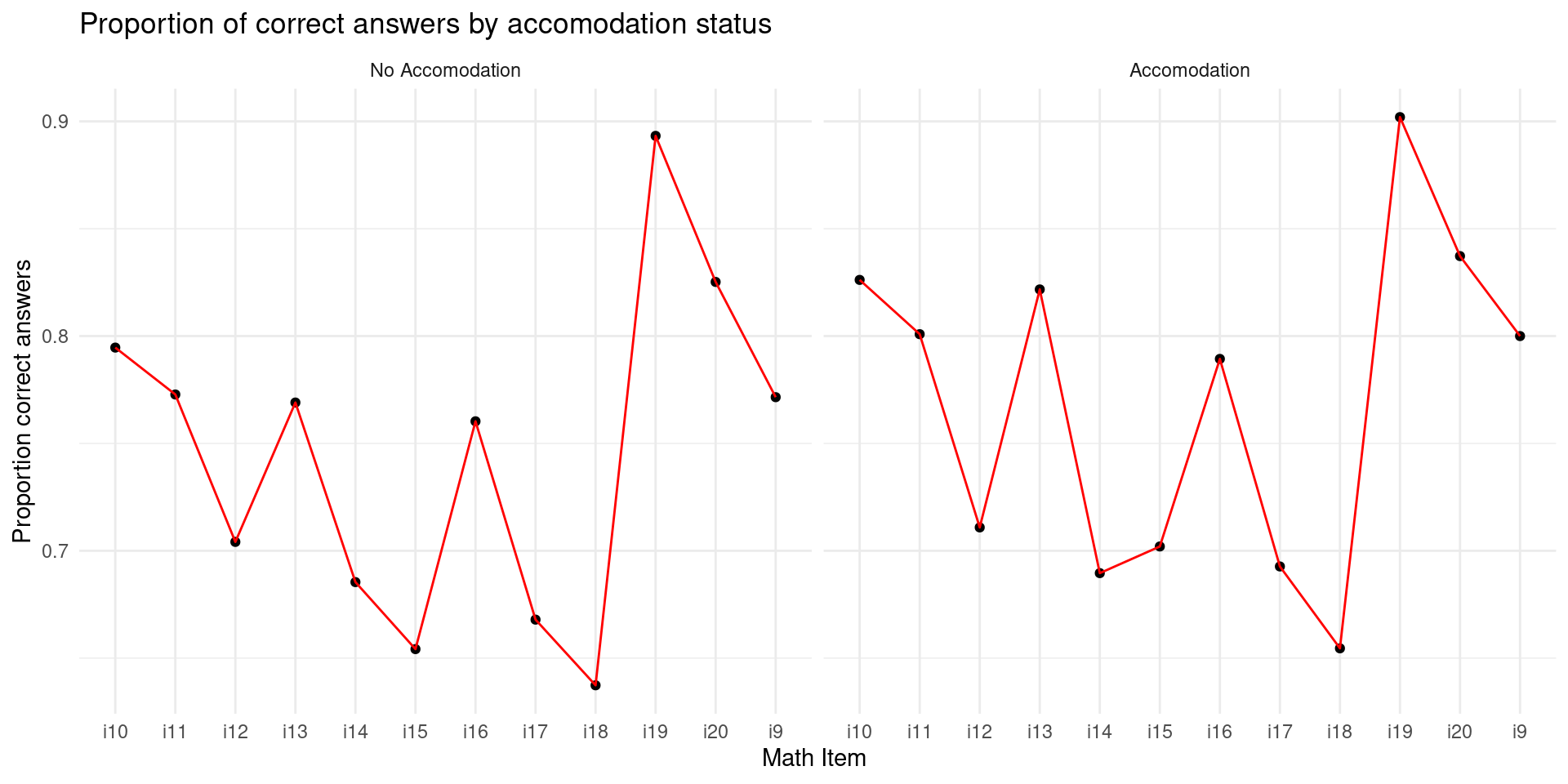

Item Difficulty

- Item difficulty is an index from Classical Test Theory (CTT).

- The idea is to evaluate how difficult an item is.

- We estimate it as the proportion of correct (or incorrect) answers to the item. A lower proportion of correct answers indicates a harder item.
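A minimal Python sketch of this proportion, assuming the items have already been scored 0/1 (rows are participants, columns are items; the data and the helper name `item_difficulty` are hypothetical):

```python
# Minimal sketch: CTT item difficulty = proportion of correct answers per item.
# Rows are participants, columns are items; the 0/1 data are hypothetical.
from typing import List


def item_difficulty(scores: List[List[int]]) -> List[float]:
    """Return the proportion of correct (1) answers for each item (column)."""
    n_people = len(scores)
    n_items = len(scores[0])
    return [sum(row[j] for row in scores) / n_people for j in range(n_items)]


data = [
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [1, 1, 1, 0],
    [0, 0, 0, 1],
    [1, 1, 0, 0],
]

p = item_difficulty(data)
print(p)  # [0.8, 0.6, 0.2, 0.6]
```

In this made-up data set the third item (p = 0.2) is the hardest and the first item (p = 0.8) is the easiest.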

Item Discrimination

- Did the people who answered an item correctly perform well on the test overall?

- We can estimate the correlation between the total score and each item:
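A minimal Python sketch of the item-total correlation follows. With 0/1 items, the Pearson correlation equals the point-biserial correlation. The sketch also shows, as an option, the "corrected" item-total correlation, which removes the item from the total so that the item does not inflate its own correlation. The data and the helper names are hypothetical.

```python
# Minimal sketch: item discrimination as the item-total correlation.
# With 0/1 items, the Pearson correlation equals the point-biserial correlation.
# The data matrix is hypothetical (rows = participants, columns = items).
from math import sqrt
from typing import List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined: a variable has zero variance")
    return sxy / sqrt(sxx * syy)


def item_total_correlations(scores: List[List[int]], corrected: bool = True) -> List[float]:
    """Correlate each item with the total score.

    If corrected is True, the item itself is removed from the total
    (corrected item-total correlation).
    """
    totals = [sum(row) for row in scores]
    result = []
    for j in range(len(scores[0])):
        item = [row[j] for row in scores]
        rest = [t - i for t, i in zip(totals, item)] if corrected else totals
        result.append(pearson(item, rest))
    return result


data = [
    [1, 1, 1, 1],
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [1, 1, 1, 0],
]

for j, r in enumerate(item_total_correlations(data), start=1):
    print(f"item {j}: r = {r:.2f}")
```

A positive correlation means that people who answered the item correctly tended to have higher scores on the rest of the test. Values near zero or negative flag items worth reviewing.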

Item Response Theory

IRT Basics

Item Response Theory (IRT) is a paradigm that addresses some questions raised by Classical Test Theory (CTT). It also helps us build more realistic and useful statistical models.

IRT assumes that there is a “latent trait” behind your items, so the responses to your items are explained by this latent trait.

Scale in IRT:

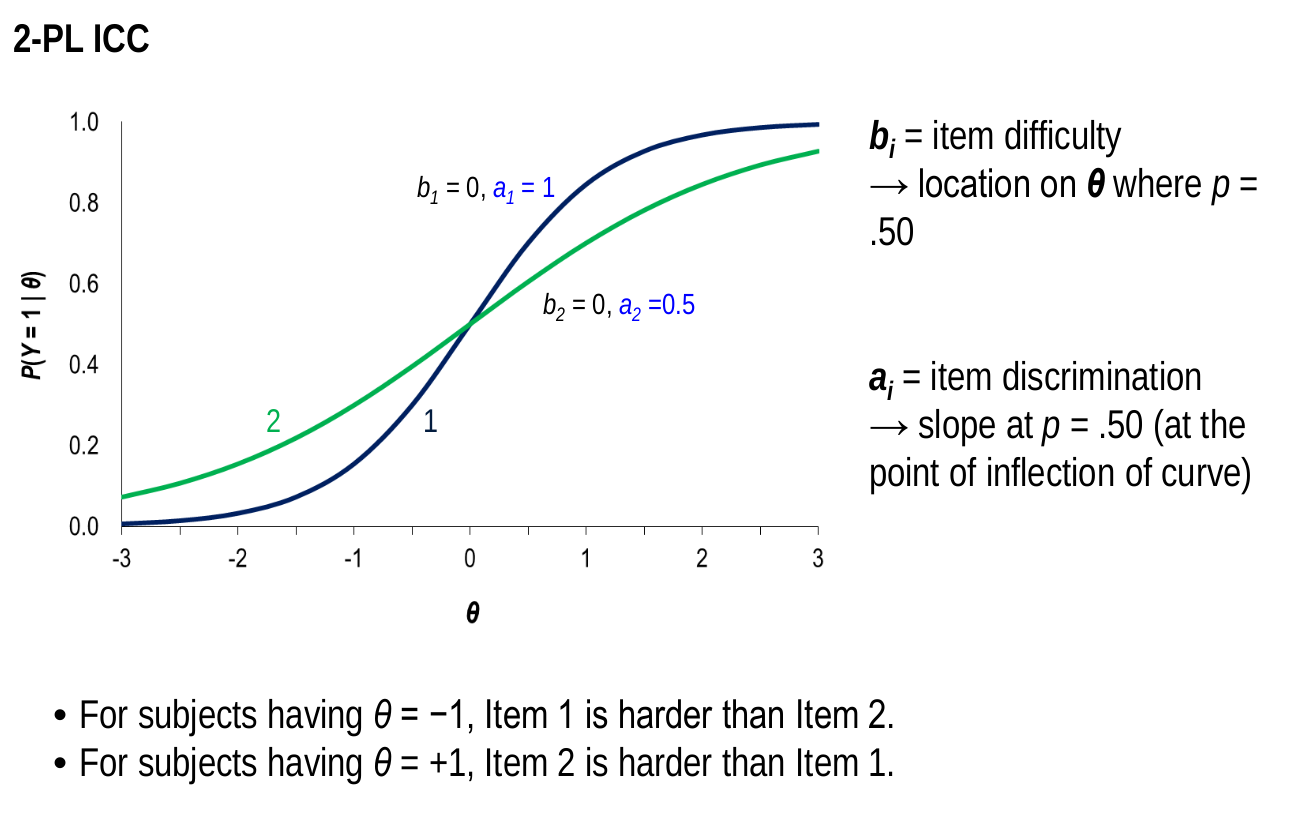

IRT Basics: 2-parameter model

IRT Basics: Item Characteristic Curve (ICC)
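An Item Characteristic Curve plots the probability of a correct response (y-axis) against the latent trait θ (x-axis). As a minimal sketch, assuming the 2PL model without the D constant and two hypothetical items, the Python code below tabulates the points of each curve:

```python
# Minimal sketch: tabulate Item Characteristic Curves (ICCs) under the 2PL model.
# The two items and their parameters (a, b) are hypothetical.
import math
from typing import Dict, List, Tuple


def icc_2pl(a: float, b: float, thetas: List[float]) -> List[float]:
    """Probability of a correct response at each theta for one 2PL item."""
    return [1.0 / (1.0 + math.exp(-a * (t - b))) for t in thetas]


thetas = [x / 2 for x in range(-8, 9)]  # -4.0 to 4.0 in steps of 0.5
items: Dict[str, Tuple[float, float]] = {
    "easy": (0.8, -1.0),  # flatter curve, located at theta = -1
    "hard": (2.0, 1.0),   # steeper curve, located at theta = 1
}

curves = {name: icc_2pl(a, b, thetas) for name, (a, b) in items.items()}

# Print a small table: one row per theta, one column per item.
print("theta  " + "  ".join(f"{name:>5}" for name in curves))
for i, t in enumerate(thetas):
    print(f"{t:5.1f}  " + "  ".join(f"{curves[n][i]:5.2f}" for n in curves))
```

Each curve crosses 0.5 at its own b, and the "hard" item rises more steeply because its a is larger. To draw the curves, the `thetas` list and each list in `curves` can be passed as x and y values to a plotting function such as matplotlib's `plot`.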

References